- When you use native code, for example in the form of Type 2 database drivers, you are again loading code into native memory. If you are an early adopter of Java 8, you are using metaspace instead of the good old PermGen to store class declarations. By default, metaspace is unlimited in size and lives in native memory.

- In our first instalment of the Memory Series – pt. 1, we reviewed the basics of Java’s Internals, Java Virtual Machine (JVM), the memory regions found within, garbage collection, and how memory leaks might happen in cloud instances.

If your application's execution time becomes longer, or if the operating system seems to be performing slower, this could be an indication of a memory leak. In other words, virtual memory is being allocated but is not being returned when it is no longer needed. Eventually the application or the system runs out of memory, and the application terminates abnormally.

This chapter contains the following sections:

Use JDK Mission Control to Debug Memory Leak

The Flight Recorder records detailed information about the Java runtime and the Java applications running in the Java runtime.

The following sections describe how to debug a memory leak by analyzing a flight recording in JMC.

Detect Memory Leak

You can detect memory leaks early and prevent OutOfMemoryError exceptions using JMC.

Detecting a slow memory leak can be hard. A typical symptom is the application becoming slower after running for a long time because of frequent garbage collections. Eventually, OutOfMemoryError exceptions may be seen. However, memory leaks can be detected early, even before such problems occur, by analyzing Java Flight recordings.

Watch whether the live set of your application is increasing over time. The live set is the amount of Java heap that is in use after an old collection, that is, after all objects that are not live have been garbage collected. To inspect the live set, open JMC and connect to a JVM using the Java Management console (JMX). Open the MBean Browser tab and look for the GarbageCollectorAggregator MBean under com.sun.management.
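The live-set idea can also be approximated from inside the JVM. The following is a minimal sketch, not part of JMC: the class name `LiveSetProbe` is hypothetical, and it relies on the standard `MemoryPoolMXBean.getCollectionUsage()` call, which reports a pool's usage as of its most recent collection. Summing over all heap pools is only a rough stand-in for "heap used after an old collection", so treat the numbers as a trend indicator.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

// Hypothetical helper: approximates the live set from the platform MXBeans.
final class LiveSetProbe {

    /** Sums the usage of every heap pool as of that pool's most recent collection, in bytes. */
    static long heapUsedAfterLastGc() {
        long total = 0;
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if (pool.getType() != MemoryType.HEAP) {
                continue; // skip metaspace, code cache, and other non-heap pools
            }
            MemoryUsage afterGc = pool.getCollectionUsage(); // null if the pool does not support it
            if (afterGc != null) {
                total += afterGc.getUsed();
            }
        }
        return total;
    }

    public static void main(String[] args) throws InterruptedException {
        // Sample a few times; a value that keeps growing over hours hints at a leak.
        for (int i = 0; i < 5; i++) {
            System.out.printf("heap used after last GC: %d KB%n", heapUsedAfterLastGc() / 1024);
            Thread.sleep(1000);
        }
    }
}
```

Logging this value periodically and plotting it gives a coarse version of the live set trend that JMC shows.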

Open JMC and start a Time fixed recording (profiling recording) for an hour. Before starting a flight recording, make sure that the option Object Types + Allocation Stack Traces + Path to GC Root is selected from the Memory Leak Detection setting.

Once the recording is complete, the recording file (.jfr) opens in JMC. Look at the Automated Analysis Results page. To detect a memory leak, focus on the Live Objects section of the page. Here is a sample figure of a recording, which shows a heap size issue:

Figure 3-1 Memory Leak - Automated Analysis Page

You can observe that in the Heap Live Set Trend section, the live set on the heap seems to increase rapidly and the analysis of the reference tree detected a leak candidate.

For further analysis, open the Java Applications page and then click the Memory page. Here is a sample figure of a recording, which shows a memory leak issue.

Figure 3-2 Memory Leak - Memory Page

You can observe from the graph that the memory usage has increased steadily, which indicates a memory leak issue.

Find the Leaking Class

You can use the Java Flight Recordings to identify the leaking class.

To find the leaking class, open the Memory page and click the Live Objects page. Here is a sample figure of a recording, which shows the leaking class.

Figure 3-3 Memory Leak - Live Objects Page

You can observe that most of the live objects being tracked are actually held on to by Leak$DemoThread, which in turn holds on to a leaked char[] array. For further analysis, see the Old Object Sample event in the Results tab, which contains a sampling of the objects that have survived. This event contains the time of allocation, the allocation stack trace, and the path back to the GC root.

When a potentially leaking class is identified, look at the TLAB Allocations page in the JVM Internals page for some samples of where objects were allocated. Here is a sample figure of a recording, which shows TLAB allocations.

Figure 3-4 Memory Leak - TLAB Allocations

Check the class samples being allocated. If the leak is slow, there may be only a few allocations of this object, and possibly no samples at all. Also, it may be that only a specific allocation site is leading to a leak. You can then make the required changes to the code to fix the leaking class.

Use java and jcmd commands to Debug a Memory Leak

Flight Recorder records detailed information about the Java runtime and the Java application running in the Java runtime. This information can be used to identify memory leaks.

Detecting a slow memory leak can be hard. A typical symptom is that the application becomes slower after running for a long time due to frequent garbage collections. Eventually, OutOfMemoryErrors may be seen.

To detect a memory leak, Flight Recorder must be running at the time that the leak occurs. The overhead of Flight Recorder is very low, less than 1%, and it has been designed to be safe to have always on in production.

Start a recording when the application is started by passing the -XX:StartFlightRecording option to the java command.

When the JVM runs out of memory and exits due to an OutOfMemoryError, a recording with the prefix hs_oom_pid is often, but not always, written to the directory in which the JVM was started. An alternative way to get a recording is to dump it before the application runs out of memory by using the JFR.dump command of the jcmd tool.

When you have a recording, use the jfr tool located in the java-home/bin directory to print Old Object Sample events (jfr print --events OldObjectSample), which contain information about potential memory leaks. The discussion that follows refers to the output of this command for a recording from an application with the pid 16276.

To identify a possible memory leak, review the following elements in the recording:

- First, notice that the lastKnownHeapUsage element in the Old Object Sample events is increasing over time, from 63.9 MB in the first event in the example to 121.7 MB in the last event. This increase is an indication that there is a memory leak. Most applications allocate objects during startup and then allocate temporary objects that are periodically garbage collected. Objects that are not garbage collected, for whatever reason, accumulate over time and increase the value of lastKnownHeapUsage.

- Next, look at the allocationTime element to see when the object was allocated. Objects that are allocated during startup are typically not memory leaks, and neither are objects allocated close to when the dump was taken. The objectAge element shows how long the object has been alive. The startTime and duration elements are not related to when the memory leak occurred, but to when the OldObject event was emitted and how long it took to gather data for it. This information can be ignored.

- Then look at the object element to see the memory leak candidate; in this example, an object of type java.util.HashMap$Node. It is held by the table field in the java.util.HashMap class, which is held by java.util.HashSet, which in turn is held by the users field of the Application class.

- The root element contains information about the GC root. In this example, the Application class is held by a stack variable in the main thread. The eventThread element provides information about the thread that allocated the object.

If the application is started with the -XX:StartFlightRecording:settings=profile option, then the recording also contains the stack trace from where the object was allocated, as shown in the following example:

In this example we can see that the object was put in the HashSet when the storeUser(String, String) method was called. This suggests that the cause of the memory leak might be objects that were not removed from the HashSet when the user logged out.
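The leak pattern described here can be illustrated with a small sketch. The class `SessionRegistry`, the `removeUser` method, and the key format are hypothetical; only the `users` field and the `storeUser(String, String)` signature echo the names mentioned in the recording. The code is not the application from the recording.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical stand-in for the Application class discussed above.
final class SessionRegistry {
    // Long-lived collection: every entry stays reachable until it is removed.
    private final Set<String> users = new HashSet<>();

    void storeUser(String name, String sessionId) {
        users.add(name + "/" + sessionId);
    }

    // The fix: drop the entry when the user logs out.
    void removeUser(String name, String sessionId) {
        users.remove(name + "/" + sessionId);
    }

    int retained() {
        return users.size();
    }

    /** Simulates logins that are each followed by a logout; returns how many entries remain. */
    static int simulate(int logins, boolean removeOnLogout) {
        SessionRegistry registry = new SessionRegistry();
        for (int i = 0; i < logins; i++) {
            String name = "user" + i;
            String session = "s" + i;
            registry.storeUser(name, session);
            if (removeOnLogout) {
                registry.removeUser(name, session);
            }
        }
        return registry.retained();
    }

    public static void main(String[] args) {
        System.out.println("entries retained without removal: " + simulate(10_000, false));
        System.out.println("entries retained with removal:    " + simulate(10_000, true));
    }
}
```

Without the removal step, the set grows with every login, which is the kind of steady growth that shows up as an increasing lastKnownHeapUsage.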

Running all applications with the -XX:StartFlightRecording:settings=profile option at all times is not recommended, because it can add overhead in certain allocation-intensive applications, but it is typically fine when debugging. Overhead is usually less than 2%.

Setting path-to-gc-roots=true creates overhead, similar to a full garbage collection, but also provides reference chains back to the GC root, which is usually sufficient information to find the cause of a memory leak.

Understand the OutOfMemoryError Exception

A java.lang.OutOfMemoryError is thrown when there is insufficient space to allocate an object in the Java heap.

One common indication of a memory leak is the java.lang.OutOfMemoryError exception. In this case, the garbage collector cannot make space available to accommodate a new object, and the heap cannot be expanded further. Also, this error may be thrown when there is insufficient native memory to support the loading of a Java class. In a rare instance, a java.lang.OutOfMemoryError can be thrown when an excessive amount of time is being spent doing garbage collection, and little memory is being freed.

When a java.lang.OutOfMemoryError exception is thrown, a stack trace is also printed.

The java.lang.OutOfMemoryError exception can also be thrown by native library code when a native allocation cannot be satisfied (for example, if swap space is low).

An early step to diagnose an OutOfMemoryError exception is to determine the cause of the exception. Was it thrown because the Java heap is full, or because the native heap is full? To help you find the cause, the text of the exception includes a detail message at the end, as shown in the following exceptions:

Cause: The detail message 'Java heap space' indicates that an object could not be allocated in the Java heap. This error does not necessarily imply a memory leak. The problem can be as simple as a configuration issue, where the specified heap size (or the default size, if it is not specified) is insufficient for the application.

In other cases, and in particular for a long-lived application, the message might be an indication that the application is unintentionally holding references to objects, and this prevents the objects from being garbage collected. This is the Java language equivalent of a memory leak. Note: The APIs that are called by an application could also be unintentionally holding object references.

One other potential source of this error arises with applications that make excessive use of finalizers. If a class has a finalize method, then objects of that type do not have their space reclaimed at garbage collection time. Instead, after garbage collection, the objects are queued for finalization, which occurs at a later time. In the Oracle Sun implementation, finalizers are executed by a daemon thread that services the finalization queue. If the finalizer thread cannot keep up with the finalization queue, then the Java heap could fill up, and this type of OutOfMemoryError exception would be thrown. One scenario that can cause this situation is when an application creates high-priority threads that cause the finalization queue to increase at a rate that is faster than the rate at which the finalizer thread is servicing that queue.

Action: To learn how to monitor objects for which finalization is pending, see Monitor the Objects Pending Finalization.

Cause: The detail message 'GC overhead limit exceeded' indicates that the garbage collector is running all the time, and the Java program is making very slow progress. After a garbage collection, if the Java process is spending more than approximately 98% of its time doing garbage collection, is recovering less than 2% of the heap, and has been doing so for the last 5 (compile-time constant) consecutive garbage collections, then a java.lang.OutOfMemoryError is thrown. This exception is typically thrown because the amount of live data barely fits into the Java heap, leaving little free space for new allocations.

Action: Increase the heap size. The java.lang.OutOfMemoryError exception for GC Overhead limit exceeded can be turned off with the command-line flag -XX:-UseGCOverheadLimit.

Cause: The detail message 'Requested array size exceeds VM limit' indicates that the application (or APIs used by that application) attempted to allocate an array that is larger than the heap size. For example, if an application attempts to allocate an array of 512 MB, but the maximum heap size is 256 MB, then OutOfMemoryError will be thrown with the reason 'Requested array size exceeds VM limit'.

Action: Usually the problem is either a configuration issue (heap size too small) or a bug that results in an application attempting to create a huge array (for example, when the number of elements in the array is computed using an algorithm that computes an incorrect size).

Cause: Java class metadata (the virtual machine's internal representation of a Java class) is allocated in native memory (referred to here as metaspace). If metaspace for class metadata is exhausted, a java.lang.OutOfMemoryError exception with the detail Metaspace is thrown. The amount of metaspace that can be used for class metadata is limited by the parameter MaxMetaspaceSize, which is specified on the command line. The exception is thrown when the amount of native memory needed for class metadata exceeds MaxMetaspaceSize.

Action: If MaxMetaspaceSize has been set on the command line, increase its value. Metaspace is allocated from the same address space as the Java heap. Reducing the size of the Java heap will make more space available for metaspace. This is only a correct trade-off if there is an excess of free space in the Java heap. See the following action for the 'Out of swap space' detail message.

Cause: The detail message 'request size bytes for reason. Out of swap space?' appears to be an OutOfMemoryError exception. However, the Java HotSpot VM code reports this apparent exception when an allocation from the native heap failed and the native heap might be close to exhaustion. The message indicates the size (in bytes) of the request that failed and the reason for the memory request. Usually the reason is the name of the source module reporting the allocation failure, although sometimes it is the actual reason.

Action: When this error message is thrown, the VM invokes the fatal error handling mechanism (that is, it generates a fatal error log file, which contains useful information about the thread, process, and system at the time of the crash). In the case of native heap exhaustion, the heap memory and memory map information in the log can be useful. See Fatal Error Log.

If this type of the OutOfMemoryError exception is thrown, you might need to use troubleshooting utilities on the operating system to diagnose the issue further. See Native Operating System Tools.

Cause: On 64-bit platforms, a pointer to class metadata can be represented by a 32-bit offset (with UseCompressedOops). This is controlled by the command-line flag UseCompressedClassPointers (on by default). If UseCompressedClassPointers is used, the amount of space available for class metadata is fixed at the amount CompressedClassSpaceSize. If the space needed for UseCompressedClassPointers exceeds CompressedClassSpaceSize, a java.lang.OutOfMemoryError with the detail 'Compressed class space' is thrown.

Action: Increase CompressedClassSpaceSize or turn off UseCompressedClassPointers. Note: There are bounds on the acceptable size of CompressedClassSpaceSize. For example, -XX:CompressedClassSpaceSize=4g exceeds the acceptable bounds and results in a message such as

CompressedClassSpaceSize of 4294967296 is invalid; must be between 1048576 and 3221225472.

Note:

There is more than one kind of class metadata: klass metadata and other metadata. Only klass metadata is stored in the space bounded by CompressedClassSpaceSize. The other metadata is stored in Metaspace.

Cause: If the detail part of the error message is 'reason stack_trace_with_native_method', and a stack trace is printed in which the top frame is a native method, then this is an indication that a native method has encountered an allocation failure. The difference between this and the previous message is that the allocation failure was detected in a Java Native Interface (JNI) or native method rather than in the JVM code.

Action: If this type of the OutOfMemoryError exception is thrown, you might need to use native utilities of the OS to further diagnose the issue. See Native Operating System Tools.

Troubleshoot a Crash Instead of OutOfMemoryError

Use the information in the fatal error log or the crash dump to troubleshoot a crash.

Sometimes an application crashes soon after an allocation from the native heap fails. This occurs with native code that does not check for errors returned by the memory allocation functions.

For example, the malloc function returns NULL if there is no memory available. If the return value from malloc is not checked, then the application might crash when it attempts to access an invalid memory location. Depending on the circumstances, this type of issue can be difficult to locate.

However, sometimes the information from the fatal error log or the crash dump is sufficient to diagnose this issue. The fatal error log is covered in detail in Fatal Error Log. If the cause of the crash is an allocation failure, then determine the reason for the allocation failure. As with any other native heap issue, the system might be configured with an insufficient amount of swap space, another process on the system might be consuming all memory resources, or there might be a leak in the application (or in the APIs that it calls) that causes the system to run out of memory.

Diagnose Leaks in Java Language Code

Use the NetBeans profiler to diagnose leaks in the Java language code.

Diagnosing leaks in the Java language code can be difficult. Usually, it requires very detailed knowledge of the application. In addition, the process is often iterative and lengthy. This section provides information about the tools that you can use to diagnose memory leaks in the Java language code.

Note:

Besides the tools mentioned in this section, a large number of third-party memory debugger tools are available. The Eclipse Memory Analyzer Tool (MAT) and YourKit (www.yourkit.com) are two examples of third-party tools with memory debugging capabilities. There are many others, and no specific product is recommended.

The following utility is used to diagnose leaks in the Java language code.

- The NetBeans Profiler: The NetBeans Profiler can locate memory leaks very quickly. Commercial memory leak debugging tools can take a long time to locate a leak in a large application. The NetBeans Profiler, however, uses the pattern of memory allocations and reclamations that leaking objects typically demonstrate, including the lack of memory reclamations. The profiler can check where these objects were allocated, which is often sufficient to identify the root cause of the leak.

The following sections describe the other ways to diagnose leaks in the Java language code.

Get a Heap Histogram

Get a heap histogram to identify memory leaks using the different commands and options available.

You can try to quickly narrow down a memory leak by examining the heap histogram. You can get a heap histogram in several ways:

- If the Java process is started with the -XX:+PrintClassHistogram command-line option, then the Control+Break handler will produce a heap histogram.

- You can use the jmap utility (jmap -histo) to get a heap histogram from a running process.

  It is recommended to use the latest utility, jcmd, instead of the jmap utility for enhanced diagnostics and reduced performance overhead. See Useful Commands for the jcmd Utility. The GC.class_histogram command of jcmd creates a heap histogram for a running process, with results similar to those of the jmap command.

  The output shows the total size and instance count for each class type in the heap. If a sequence of histograms is obtained (for example, every 2 minutes), then you might be able to see a trend that can lead to further analysis.

- You can use the jhsdb jmap utility to get a heap histogram from a core file.

  For example, if you specify the -XX:+CrashOnOutOfMemoryError command-line option while running your application, then when an OutOfMemoryError exception is thrown, the JVM will generate a core dump. You can then execute jhsdb jmap on the core file to get a histogram.

  In the referenced example, the histogram shows that the OutOfMemoryError exception was caused by the number of byte arrays (2108 instances in the heap). Without further analysis it is not clear where the byte arrays are allocated. However, the information is still useful.

Monitor the Objects Pending Finalization

Different commands and options are available to monitor the objects pending finalization.

When the OutOfMemoryError exception is thrown with the 'Java heap space' detail message, the cause can be excessive use of finalizers. To diagnose this, you have several options for monitoring the number of objects that are pending finalization:

- The JConsole management tool can be used to monitor the number of objects that are pending finalization. This tool reports the pending finalization count in the memory statistics on the Summary tab pane. The count is approximate, but it can be used to characterize an application and understand if it relies a lot on finalization.

- On Linux operating systems, the jmap utility can be used with the -finalizerinfo option to print information about objects awaiting finalization.

- An application can report the approximate number of objects pending finalization using the getObjectPendingFinalizationCount method of the java.lang.management.MemoryMXBean interface. Links to the API documentation and example code can be found in Custom Diagnostic Tools. The example code can easily be extended to include the reporting of the pending finalization count.
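As a minimal sketch of the last option, the following reads the pending finalization count through the standard `MemoryMXBean`. The class name `FinalizationMonitor` and the sampling loop are hypothetical, and the returned count is approximate by design.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

// Hypothetical helper around the standard MemoryMXBean.
final class FinalizationMonitor {

    /** Returns the approximate number of objects for which finalization is pending. */
    static int pendingFinalizationCount() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        return memory.getObjectPendingFinalizationCount();
    }

    public static void main(String[] args) throws InterruptedException {
        // A count that keeps climbing suggests the finalizer thread cannot keep up.
        for (int i = 0; i < 5; i++) {
            System.out.println("objects pending finalization: " + pendingFinalizationCount());
            Thread.sleep(1000);
        }
    }
}
```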

Diagnose Leaks in Native Code

Several techniques can be used to find and isolate native code memory leaks. In general, there is no ideal solution for all platforms.

The following are some techniques to diagnose leaks in native code.

Track All Memory Allocation and Free Calls

Tools are available to track all memory allocation and use of that memory.

A very common practice is to track all allocation and free calls of the native allocations. This can be a fairly simple process or a very sophisticated one. Many products over the years have been built up around the tracking of native heap allocations and the use of that memory.

Tools like IBM Rational Purify can be used to find these leaks in normal native code situations and also find any access to native heap memory that represents assignments to un-initialized memory or accesses to freed memory.

Not all these types of tools will work with Java applications that use native code, and usually these tools are platform-specific. Because the virtual machine dynamically creates code at runtime, these tools can incorrectly interpret the code and fail to run at all, or give false information. Check with your tool vendor to ensure that the version of the tool works with the version of the virtual machine you are using.

See sourceforge for many simple and portable native memory leak detecting examples. Most libraries and tools assume that you can recompile or edit the source of the application and place wrapper functions over the allocation functions. The more powerful of these tools allow you to run your application unchanged by interposing over these allocation functions dynamically.

Track All Memory Allocations in the JNI Library

If you write a JNI library, then consider creating a localized way to ensure that your library does not leak memory, by using a simple wrapper approach.

The procedure in the following example is an easy localized allocation tracking approach for a JNI library. First, define the following lines in all source files.

Then, you can use the functions in the following example to watch for leaks.

The JNI library would then need to periodically (or at shutdown) check the value of the total_allocated variable to verify that it made sense. The preceding code could also be expanded to save in a linked list the allocations that remained, and report where the leaked memory was allocated. This is a localized and portable way to track memory allocations in a single set of sources. You would need to ensure that debug_free() was called only with the pointer that came from debug_malloc(), and you would also need to create similar functions for realloc(), calloc(), strdup(), and so forth, if they were used.

A more global way to look for native heap memory leaks involves interposition of the library calls for the entire process.

Track Memory Allocation with Operating System Support

Tools are available for tracking memory allocation in an operating system.

Most operating systems include some form of global allocation tracking support.

- On Windows, search Microsoft Docs for debug support. The Microsoft C++ compiler has the /MD and /MDd compiler options that will automatically include extra support for tracking memory allocation.

- Linux systems have tools such as mtrace and libnjamd to help in dealing with allocation tracking.

September 02, 2020

Disclaimer: This blog post is part of the BellSoft Guest Author Program where BellSoft invites experts to share their insights on the IT industry. The views and opinions expressed in this blog post are those of the authors and do not necessarily reflect the official policy or position of BellSoft. Any content provided by our bloggers or authors are of their opinion and are not intended to malign any religion, ethnic group, club, organization, company, individual or anyone or anything.

In this post about JDK Flight Recorder and Mission Control, a powerful diagnostic and profiling tool built into OpenJDK, I would like to focus on JVM memory features.

What are the memory problems?

If you put the words “garbage collection” and “problem” into one sentence, many would think of the infamous Stop-the-World pauses that the JVM is prone to trigger. Lengthy Stop-the-World pauses are indeed very problematic from an application perspective.

While garbage collection (GC) and Stop-the-World (STW) pauses are connected, they are not the same. The problem with GC is likely to manifest itself as prolonged STW pauses, but GC does not necessarily cause them.

General optimization of application code allocation patterns is another standard task, and Flight Recorder with Mission Control could be of great help here. Optimized memory allocation in application code benefits both GC and overall execution performance.

Finally, the application code may have memory leaks. Though I believe heap dumps are the best tool for diagnosing memory leaks, JDK Flight Recorder offers an alternative approach with its own pros and cons. In particular, heap dumps require a Stop-the-World heap inspection to capture and tend to be large (depending on the heap size), while JFR files do not grow proportionally to the heap size.

Below is a plan for the rest of this article.

- Garbage collection related reports

- JVM Operations aka the Stop-the-World pause report

- Memory allocation sampling

- Old object sampling overview

Starting memory profiling JDK Flight Recorder session

You would need OpenJDK with JDK Flight Recorder support and Mission Control. I was using Liberica OpenJDK 11.0.7+10 and Liberica Mission Control 7.1.1.

There are multiple ways to start a JDK Flight Recorder session. You can do it with Mission Control UI (locally or via JMX), with jcmd from console, or even configure it to auto-start with JVM command-line arguments.

All these options are covered in my previous post, so I will omit the details here and jump right to Flight Recorder session settings.

The configuration dialog of the Flight Recorder session in Mission Control has a few options relevant to the features I am going to explain later.

Garbage Collector

The default level is “Normal,” and it should be enough. You may choose “All,” which adds more details about GC Phases.

Memory Profiling

Here you may choose one of three options:

- Off

- Object Allocation and Promotion

- All, including Heap Statistics

I strongly suggest you stay with “Object Allocation and Promotion.”

It provides valuable data points for allocation profiling with low overhead.

Heap Statistics included in the last option force regular Stop-the-World heap inspections which could be quite lengthy.

Memory Leak Detection

If you suspect memory leaks in your application, tweak these options. You need to choose the “Object Types + Allocation Stack Traces + Path to GC Root” level of detail to see the leaking path in the report afterward. Calculating “Path to GC Root” is a moderately expensive Stop-the-World operation, and I would not recommend enabling it just as a precaution.

Garbage collection in OpenJDK

OpenJDK JVM can use different implementations of garbage collectors.

The most commonly used ones in HotSpot JVM are

- Garbage First

- Parallel Mark Sweep Compact

- Concurrent Mark Sweep (CMS) — discontinued in Java 14

There are several other algorithms available in various JDKs, but I am not going to get too deep for the sake of brevity.

Each GC has its unique features, but a few are the same.

All the algorithms above are generational, meaning they split available heap space as separate young and old space. The result is three types of GC cycle:

- Minor (young) collections — recycle only young space

- Major (old) collections — recycle old and young space

- Last resort full collections — a big STW whole heap collection you want to avoid

Each GC cycle is split into a hierarchy of phases. Some could be concurrent (executing in parallel with application code), but most require a Stop-the-World pause.

Garbage collector report

Mission Control has a generic “Garbage Collections” report agnostic of the GC algorithm used by JVM.

This report shows a list of GC pauses with a breakdown by phases (table on the right).

Information in this report could replace the typical usage of GC logs. Here you can quickly spot the longest GC pause and dig a little deeper into its details. Notice the GC ID assigned to each GC cycle: these IDs are useful for finding additional events that are related to a GC but not included in this report.

Two key metrics could be used to assess GC performance regardless of the algorithm:

- Max duration of a Stop-the-World pause — it may add up to your application’s request processing time, hence increasing response time.

- Percentage of time spent in a Stop-the-World pause during a certain period — e.g., if we spent 6 seconds per minute in GC, the application can utilize 90% of available CPU resources at most.

The chart on the “Garbage collection” report helps to spot both metrics easily. “Longest pause” and “Sum of pauses” visualize these metrics aggregated over regular time buckets (2 minutes in the screenshot).

“Longest pause” shows the longest GC pause in the bucket, so you can observe your regular long pauses and outliers, if applicable.

“Sum of pauses” shows the sum of all GC pauses in the bucket. Use this metric to derive the percentage of time spent on GC pauses. Hover your mouse over the bar, and you will see the exact numbers in the tooltip.

In the example screenshot, the sum of pauses over 2 minutes equals 4 seconds. GC overhead is 4 / 120 ≈ 3.3%, which is reasonable.
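The arithmetic behind both metrics is simple enough to script when you export the numbers from the report. The sketch below is only an illustration: the class and method names are hypothetical and not part of Mission Control or any JDK API.

```java
import java.time.Duration;

// Hypothetical helper, not part of JMC or the JDK.
final class GcOverhead {

    /** Percentage of wall-clock time spent in Stop-the-World pauses within a time bucket. */
    static double overheadPercent(Duration sumOfPauses, Duration bucket) {
        if (bucket.isZero() || bucket.isNegative()) {
            throw new IllegalArgumentException("bucket must be positive");
        }
        return 100.0 * sumOfPauses.toNanos() / bucket.toNanos();
    }

    /** Upper bound on the share of time left for application code in that bucket. */
    static double maxApplicationSharePercent(Duration sumOfPauses, Duration bucket) {
        return 100.0 - overheadPercent(sumOfPauses, bucket);
    }

    public static void main(String[] args) {
        // 4 seconds of pauses in a 2-minute bucket, as in the example screenshot.
        System.out.println(overheadPercent(Duration.ofSeconds(4), Duration.ofMinutes(2)));
        // 6 seconds of pauses per minute leaves at most 90% for the application.
        System.out.println(maxApplicationSharePercent(Duration.ofSeconds(6), Duration.ofMinutes(1)));
    }
}
```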

What if you are not happy with your GC performance?

GC tuning is a big topic out of scope for this article, but I will highlight a few typical cases.

‘Heap is too small’ problem

GC in modern JVMs usually just works. A lot of engineering effort has gone into making GC adapt to application needs. However, GC is not magic: it needs enough memory to accommodate the application's live data set, plus some headroom to work efficiently.

If the heap size is not enough, you will see increased GC overhead (percentage of time spent on GC pauses) and, as a consequence, the application slowing down.

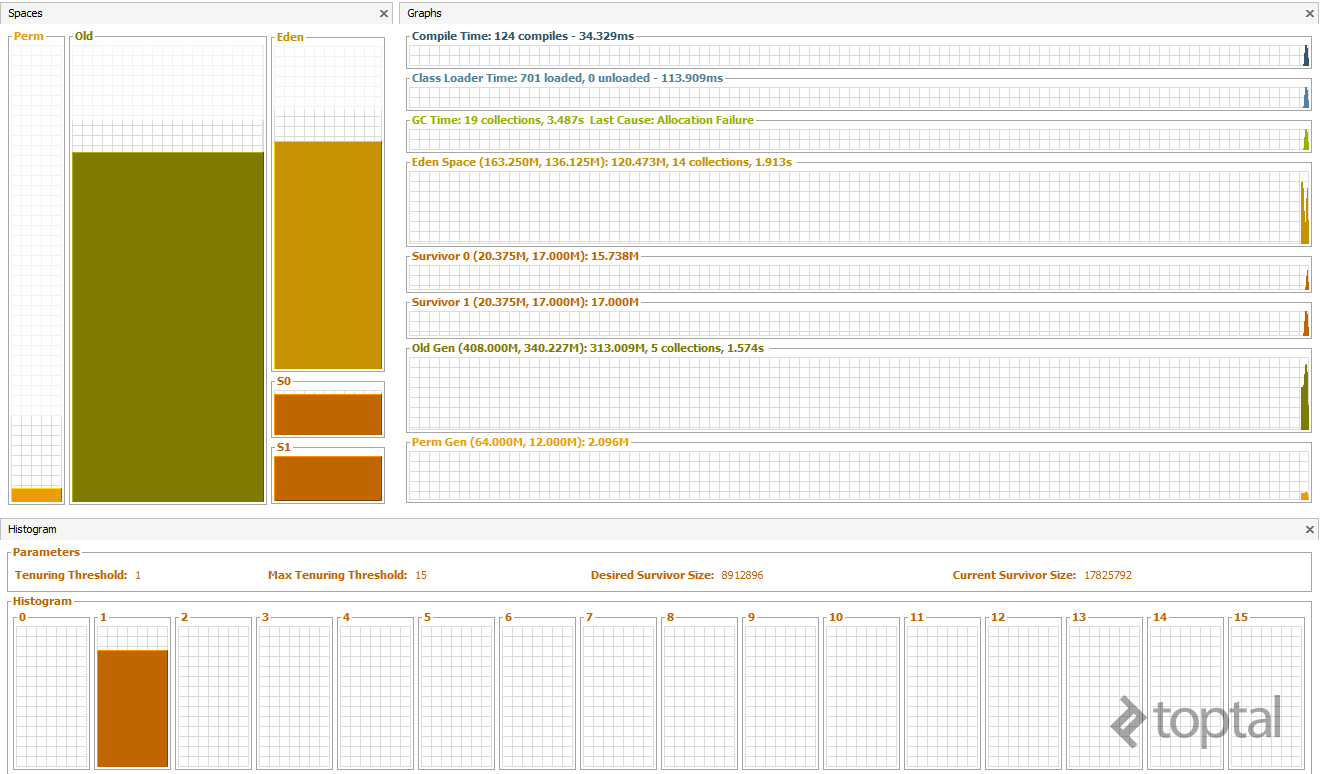

The screenshot above illustrates JVM running on low memory.

During the hovered time bucket, the JVM spent 1.4 seconds out of 2 in GC pauses, which is a clearly unhealthy proportion.

Often increasing the max heap allowed to JVM solves the problem with high GC overhead. However, if an application has a memory leak, increasing heap size is no help. The leak in such a situation needs to be fixed.

‘Metaspace is too small’ problem

Metaspace is a memory area that stores class metadata. Since Java SE 8, metaspace is no longer part of the heap, yet a shortage of metaspace can still trigger a garbage collection.

To free some memory in metaspace, JVM needs to unload unused classes. Class unloading, in turn, requires a major GC on the heap. The reason is that objects on the heap keep references to their classes, and a class cannot be unloaded while any instance of it still exists on the heap.

You would quickly spot the metaspace keyword in the “Cause” column in this case. You can also view the metaspace size in the timeline chart at the bottom of the report to check if you are hitting the limit.
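Outside of a flight recording, you can also read metaspace usage from inside a running JVM through the standard `java.lang.management` API. The sketch below assumes the memory pool is named "Metaspace", which is what HotSpot-based JVMs report; other JVMs may use a different name, in which case the method returns an empty result. The class name is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.Optional;

// Hypothetical helper; the pool name "Metaspace" is an assumption about HotSpot.
final class MetaspaceCheck {

    /** Returns current metaspace usage, or empty if this JVM exposes no such pool. */
    static Optional<MemoryUsage> metaspaceUsage() {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if ("Metaspace".equals(pool.getName())) {
                return Optional.ofNullable(pool.getUsage());
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Optional<MemoryUsage> usage = metaspaceUsage();
        if (usage.isPresent()) {
            MemoryUsage u = usage.get();
            // getMax() returns -1 when no limit is defined for the pool.
            System.out.println("used=" + u.getUsed()
                    + " committed=" + u.getCommitted()
                    + " max=" + u.getMax());
        } else {
            System.out.println("No pool named Metaspace on this JVM");
        }
    }
}
```

If the used value keeps approaching a configured maximum, that matches the situation described above, where metaspace shows up as a GC cause.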

Special reference abuse

Java™ offers utility classes that have very specific semantics related to garbage collection. These are weak, soft and phantom references. Besides, JVM is using finalizer references internally to implement the semantics of finalizers.

All these references require special processing within a GC pause. Because of their semantics, special references can only be processed after all live objects have been found.

Usually, this processing runs fast, but abusing these special references (especially finalizers as they are most expensive) could cause abnormally long pauses for otherwise healthy GC.

Columns with a count of each special reference type are on the right side in the GC event table. Numbers on the order of tens of thousands could indicate a problem.

You can also find the reference processing phase in the “Pause Phases” table and see how much time was spent on it.

More GC related events

The “Garbage Collections” report is a handy tool to spot standard problems, but it does not display all GC details available from JDK Flight Recorder.

As usual, you can get to the event browser and look at specific events directly. Look under Java Virtual Machine / GC / Details for more diagnostic data related to garbage collection.

Besides work done under Stop-the-World, some collectors (G1 in particular) use background threads working in parallel with application threads to assist in memory reclamation. You may find details for such tasks if you look at the “GC Phase Concurrent” event type.

Stop-the-World pauses in OpenJDK

A Stop-the-World (STW) pause is a state of JVM when all application threads are frozen, and internal JVM routines have exclusive access to the process memory.

Hotspot JVM has a protocol called safepoint to ensure proper Stop-the-World pauses.

STW pauses are mainly associated with GC activity, but GC is only one possible cause. In Hotspot JVM, STW pauses are also used for other special JVM operations.

Namely:

- JIT compiler related operations (e.g. deoptimization or OSR)

- Biased lock revocation

- Thread dumps and other diagnostic operations, including JFR-specific ones

As a rule, STW pauses are unnoticeably short (less than a millisecond), but putting JVM on a safepoint is a sophisticated process. Things can go wrong here.

In the previous post, I mentioned the safepoint bias. Safepoint protocol requires each thread executing Java code to explicitly put itself in a “safe” state, where JVM knows exactly how local variables are stored in the memory on the stack.

Once a pause is initiated, each thread running Java code receives a signal to bring itself to a safepoint. A JVM operation cannot begin until all threads have confirmed their safe state (threads executing native code via JNI are an exception; STW does not stop them unless they try to access the heap or switch from native code back to Java).

If one or more threads are slow to reach the safe state, the rest of the JVM, already effectively frozen, has to wait for them.

There are two main reasons for such misbehavior:

- A thread is blocked while accessing a memory page (D state in Linux) and cannot react

- A thread is stuck in a hot loop where the JIT compiler omitted the safepoint check because the loop was considered fast enough not to need one.

The first situation may happen if the system is swapping or application code is using memory-mapped IO. The second one may occur in heavily optimized computation-intensive code (in the Java runtime, java.lang.String and java.math.BigDecimal operations over large objects are common culprits).

VM Operations report

Mission Control has a report with a summary of all Stop-The-World VM operations.

This report shows a summary grouped by type of operations. In the screenshot, “CGC_Operation” and “G1CollectForAllocation” are the only operations related to GC (G1 in particular).

You can see a fair number of other VM operations, but they are very quick.

Also, bear in mind that many operations are caused by JFR itself, such as “JFRCheckpoint,” “JFROldObject,” “FindDeadlocks,” etc.

Problems with safepoints are relatively rare in practice, but spending a few seconds to quickly check the “VM Operations” report for abnormal pauses is a good habit.
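The same check can be scripted with the `jdk.jfr` API that ships with OpenJDK 11 and later. The sketch below records a workload in-process, then sums the duration of `jdk.ExecuteVMOperation` events per operation name. The class and method names are hypothetical, and reading the `operation` field through `getValue` is an assumption about how that event exposes its operation name.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordedEvent;
import jdk.jfr.consumer.RecordingFile;

// Hypothetical helper built on the jdk.jfr API; not part of Mission Control.
final class VmOperationSummary {
    static final String EVENT = "jdk.ExecuteVMOperation";

    /** Runs the workload under a recording and returns total time per VM operation. */
    static Map<String, Duration> record(Runnable workload) {
        try (Recording recording = new Recording()) {
            recording.enable(EVENT).withoutThreshold();
            recording.start();
            workload.run();
            recording.stop();
            Path file = Files.createTempFile("vmops", ".jfr");
            try {
                recording.dump(file);
                return totalByOperation(RecordingFile.readAllEvents(file));
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Sums event durations grouped by the value of the "operation" field. */
    static Map<String, Duration> totalByOperation(List<RecordedEvent> events) {
        Map<String, Duration> totals = new TreeMap<>();
        for (RecordedEvent event : events) {
            if (!EVENT.equals(event.getEventType().getName())) {
                continue;
            }
            Object operation = event.getValue("operation");
            totals.merge(String.valueOf(operation), event.getDuration(), Duration::plus);
        }
        return totals;
    }

    public static void main(String[] args) {
        // An explicit GC request usually results in at least one VM operation.
        record(System::gc).forEach((op, total) -> System.out.println(op + " " + total));
    }
}
```

For a recording taken elsewhere, `totalByOperation(RecordingFile.readAllEvents(path))` can be applied to the file directly.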

Memory allocation profiling

While JVM is heavily optimized for allocating a lot of short-lived objects, excessive memory allocation may cause various problems.

Allocation profiling helps to identify which code is responsible for the most intense allocation of new objects.

Mission Control has a “Memory” report giving an overview of object allocation in application code.

This report shows a timeline of the application’s heap allocation rate and a histogram of allocation size by object type.

At first glance, the report looks too ascetic and done in broad strokes, notably if you have used Mission Control 5.5.

There are many more details to squeeze out from this report with Mission Control magic, but we need to get an idea of the source for these numbers.

How are allocation profiling data collected?

Allocation profiling in JVM was a rather challenging task. While previously many profilers had allocation profiling features, the performance impact on an application with allocation profiling turned on was inconvenient and often prohibitive.

Allocation profiling is based on sampling. A runtime allocation profiler collects a sample of allocation events to get the whole picture. Before JEP-331 (available since OpenJDK 11), profilers had to instrument all Java code and inject extra logic at every allocation site. The performance impact from such code mangling was dramatic.

JDK Flight Recorder, on the contrary, was always able to use low overhead allocation profiling. The key is to record TLAB allocation events instead of sampling normal ones.

Almost all new Java objects are allocated in the so-called Eden (a part of young object heap space). Not to compete for shared memory management structures, each Java thread reserves a thread local allocation buffer (TLAB) in Eden and allocates new objects there. It is an essential performance optimization for multicore hardware.

Eventually, the buffer runs out, and the thread has to allocate a new one. This type of event is the one recorded by Flight Recorder. The average TLAB size is roughly 500 KiB (the size is dynamic and changes per thread), so about one allocation per 500 KiB of allocated memory is recorded. This way, Flight Recorder does not add any overhead to the hot path of object allocation, yet it can get enough samples for further analysis.

Each sample has a reference to the Java thread, stack trace, type and size of the object being allocated, as well as the size of the new TLAB. You can find raw events in the event browser under “Allocation in new TLAB.”
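To get a feel for the sampling density, here is a back-of-the-envelope calculation. It assumes a fixed 500 KiB average TLAB, whereas real TLAB sizes vary per thread and over time, so treat the result as an order-of-magnitude estimate. The class and method names are hypothetical.

```java
// Hypothetical helper for rough estimates; not a JDK or JMC API.
final class TlabSampling {
    static final long KIB = 1024L;
    static final long GIB = 1024L * 1024L * 1024L;

    /** Expected number of "new TLAB" events per second for a given allocation rate. */
    static double expectedEventsPerSecond(long allocatedBytesPerSecond, long averageTlabBytes) {
        if (averageTlabBytes <= 0) {
            throw new IllegalArgumentException("averageTlabBytes must be positive");
        }
        return (double) allocatedBytesPerSecond / averageTlabBytes;
    }

    /** Rough allocation volume represented by a number of recorded events. */
    static long estimatedAllocatedBytes(long eventCount, long averageTlabBytes) {
        return eventCount * averageTlabBytes;
    }

    public static void main(String[] args) {
        // An application allocating 1 GiB/s with ~500 KiB TLABs yields roughly 2,100 samples per second.
        System.out.println(expectedEventsPerSecond(GIB, 500 * KIB));
    }
}
```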

But how would I know which code is allocating the most?

The “Memory” report is based on the “Allocation in new TLAB” events, which have an associated thread and stack trace. It means we can use the “Stack Trace” view and filtering to extract many more details from this report.

A typical workflow for identifying memory hot spots looks as follows:

- Open the “Memory” report.

- Open the “Stack Trace” view (enable via Window > Show View > Stack Trace if hidden).

- Enable “Show as Tree” and “Group traces from last method” on the “Stack Trace” view. Such a configuration is the most convenient for this kind of sampling.

- Switch “Distinguish Frames By” to “Line Number” in the context menu of the “Stack Trace” view if you want to see line numbers.

- By this point, you can already see a top hot spot of allocation in your application. You may also select a specific object type in the “Memory” report to only examine this type-related allocation traces.

Further, you can select a range on the timeline to zoom in on a specific period. Another option is applying filters to narrow the report to individual threads. All the tricks for the “Method Profiling” report described in the previous post would work here too.
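The workflow above can also be approximated without the UI. The sketch below uses the `jdk.jfr` API (OpenJDK 11 and later) to record `jdk.ObjectAllocationInNewTLAB` events for a workload and attribute the `tlabSize` of each event to the top frame of its stack trace. The class and method names are hypothetical, and grouping by top frame is just one possible choice; it roughly mirrors what the “Stack Trace” view shows at its first level.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import jdk.jfr.Recording;
import jdk.jfr.consumer.RecordedEvent;
import jdk.jfr.consumer.RecordedFrame;
import jdk.jfr.consumer.RecordedStackTrace;
import jdk.jfr.consumer.RecordingFile;

// Hypothetical helper built on the jdk.jfr API; not part of Mission Control.
final class AllocationHotSpots {
    static final String EVENT = "jdk.ObjectAllocationInNewTLAB";

    /** Records the workload in-process and returns TLAB bytes per allocating frame. */
    static Map<String, Long> profile(Runnable workload) {
        try (Recording recording = new Recording()) {
            recording.enable(EVENT).withStackTrace();
            recording.start();
            workload.run();
            recording.stop();
            Path file = Files.createTempFile("alloc", ".jfr");
            try {
                recording.dump(file);
                return bytesByTopFrame(RecordingFile.readAllEvents(file));
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Sums tlabSize per "Class.method:line" of the top stack frame. */
    static Map<String, Long> bytesByTopFrame(List<RecordedEvent> events) {
        Map<String, Long> bytes = new HashMap<>();
        for (RecordedEvent event : events) {
            if (!EVENT.equals(event.getEventType().getName())) {
                continue;
            }
            RecordedStackTrace trace = event.getStackTrace();
            if (trace == null || trace.getFrames().isEmpty()) {
                continue; // no stack trace captured for this sample
            }
            RecordedFrame top = trace.getFrames().get(0);
            if (!top.isJavaFrame() || top.getMethod() == null) {
                continue;
            }
            String key = top.getMethod().getType().getName() + "."
                    + top.getMethod().getName() + ":" + top.getLineNumber();
            bytes.merge(key, event.getLong("tlabSize"), Long::sum);
        }
        return bytes;
    }
}
```

Sorting the resulting map by value in descending order gives a rough ranking of allocation hot spots.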

Live object sampling

Memory leaks are another well-known problem for Java applications. Traditional approaches to memory leak diagnostics rely on heap dumps.

While I consider heap dumps a vital tool for memory troubleshooting, they have their drawbacks. More specifically, heap dumps can be quite large, are slow to process, and require a Stop-the-World heap inspection to capture.

Flight Recorder offers an alternative approach: live object sampling.

The idea is simple: we already have allocation sampling. Now, from the newly allocated objects, Flight Recorder picks a few to trace across their lifetime. If an object dies, it is excluded from the sample (and no event is produced).

Then events are dumped into a file at the end of recording. Flight Recorder may optionally calculate and record the path to the nearest GC root for each object in a live object sample (a configuration option mentioned at the beginning of this article). This operation is expensive—requiring an STW heap inspection—but necessary to understand how an object is leaking.

But these are just random objects. How would sampling help find a leak?

Normal objects are short-lived, but leaked objects can survive for a long time. If you sample the JVM for long enough, you will likely catch a few leaked objects in the sample. Once these objects get to the final report, we can identify where they are leaking via the recorded path to the nearest GC root.
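To make the difference between the two kinds of objects concrete, here is a deliberately leaky toy class. Everything in it is hypothetical. The `scratch` array becomes garbage as soon as the method returns, so a sampled instance of it would simply drop out of the sample. The `audit` array stays reachable through a static list, so a sampled instance would survive, and its path to the nearest GC root would lead through the `HISTORY` field.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical example of a leak; not taken from any real application.
final class LeakyRequestLog {
    // A static collection stays reachable for as long as the class is loaded.
    private static final List<byte[]> HISTORY = new ArrayList<>();

    static int handleRequest(int payloadBytes) {
        byte[] scratch = new byte[payloadBytes]; // short-lived: unreachable after return
        byte[] audit = new byte[payloadBytes];   // long-lived: retained below
        HISTORY.add(audit);                      // never removed, so memory grows per request
        return scratch.length;
    }

    /** Number of arrays currently retained by the static list. */
    static int retained() {
        return HISTORY.size();
    }

    public static void main(String[] args) {
        for (int i = 0; i < 1000; i++) {
            handleRequest(1024);
        }
        System.out.println("retained arrays: " + retained());
    }
}
```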

Mission Control has a “Live Objects” report to visualize this kind of event. You would also like the “Stack Trace” view to be enabled here.

In the table, you can see a sampled object grouped by GC root. You can unfold a reference path from a GC root down to individual objects from the sample.

What is great is that you can see the allocation stack trace of each sampled object in the “Stack Trace” view as well. This information is unique to Flight Recorder and cannot be reconstructed from a regular heap dump.

Does it work?

This feature is relatively new in Flight Recorder, whereas heap dump analysis is a reliable, time-tested technique.

A live object sample in Flight Recorder is rather small, and it is a matter of luck whether it catches a problematic object or not. On the other hand, Flight Recorder files are several orders of magnitude smaller than heap dumps.

While Old Object Sampling is definitely not a silver bullet, it offers a fascinating alternative to the traditional heap dump wrangling approach for memory leak investigations.

Conclusions

Memory in JVM is a complicated matter. JDK Flight Recorder and Mission Control have many features to help users with typical problems. Nonetheless, the learning curve remains steep.

On the bright side, complex JVM machinery such as GC and safepoints usually works fine on its own, so there is rarely a need to dig deep. Still, it is wise to know where to look if you have to.

Memory optimization is a regular task. The allocation profiling features in Flight Recorder and Mission Control are highly beneficial in practice. If you asked me about the single most crucial Flight Recorder component, I would name allocation profiling without a second thought.

Live object sampling is also an appealing approach for memory leak detection. Unlike heap dumps, it is much more appropriate for automation and continuous usage in production environments.
